There are endless arguments and opinions about how many calls to assess per agent per month. I suspect there’s a rough consensus that you ought to review about 8 calls per agent per month. Some contact centers reduce that number for tenured agents who have posted good quality scores in the past.

There’s another answer though, and it’s based on the hard mathematics of statistics.

The field of statistics is a fascinating area of study. With statistical formulae and processes you can tease interesting information out of basic data about the population you happen to be studying. One well-understood process has to do with sample size.

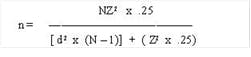

There is a formula in statistics to determine how large a sample your study needs before you can draw conclusions with confidence. The higher the confidence level, the more likely it is that conclusions drawn from the sample hold for the whole study population. The formula is:

In this formula, “n” is the sample size required. “N” is the size of the population under study. “d” is the precision level desired, that is, the margin of error you are willing to accept, expressed as a proportion. Typically, good surveys use a 95% confidence level, and “d” is set to 0.05. Sometimes a confidence level of 90% is good enough to draw defensible conclusions, and “d” is set to 0.1. Finally, “Z” is the number of standard deviation units of the sampling distribution that corresponds to the desired confidence level. “Z” is approximately 1.645 when the confidence level is 90% and 1.96 when the confidence level is 95%.
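For reference, one form of the formula that is consistent with these definitions, and with the worked result further down, is the finite-population sample size formula for a proportion. The term p(1 − p) is an assumption, since it is not named in the text. It stands for the expected variability of the thing being measured, and setting p = 0.5 is the most conservative choice:

$$
n = \frac{Z^2 \, N \, p(1-p)}{d^2 (N-1) + Z^2 \, p(1-p)}, \qquad p = 0.5 \;\Rightarrow\; p(1-p) = 0.25
$$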

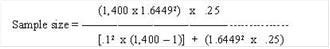

Let’s apply the formula to determine how many quality monitoring sessions we need before we can draw defensible conclusions about performance. Suppose an agent can handle 70 calls per day. At five days per week and four weeks per month, the agent will have handled 70 × 5 × 4 = 1,400 conversations in a month. This is the population we wish to study. It is the “N” in our formula. So, if we take the path of least resistance and only solve for a confidence level of 90%, with “d” equal to 0.1, the formula becomes:
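As a sketch of the substitution, assuming the finite-population formula for a proportion with the conservative variability term p(1 − p) = 0.25 (an assumption, not stated in the text), and using a “Z” of 1.644 for the 90% confidence level:

$$
n = \frac{1.644^2 \times 1400 \times 0.25}{0.1^2 \times (1400 - 1) + 1.644^2 \times 0.25} \approx \frac{945.96}{14.666} \approx 64.5
$$

With the more usual rounding of “Z” to 1.645, the result is about 64.6, which rounds up to the same whole number of sessions.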

Sample size = 64.5
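The same calculation can be sketched in a few lines of Python, which makes it easy to try other call volumes or confidence levels. The function name `required_sample_size` and the default `p=0.5` (worst-case variability) are illustrative assumptions, not something the article specifies.

```python
import math


def required_sample_size(population, z, precision, p=0.5):
    """Finite-population sample size for estimating a proportion.

    p=0.5 is an assumed worst-case variability; the article does not state it.
    """
    variability = z ** 2 * p * (1 - p)
    return (population * variability) / (
        precision ** 2 * (population - 1) + variability
    )


# 70 calls a day, 5 days a week, 4 weeks a month: the article's "N"
calls_per_month = 70 * 5 * 4

# 90% confidence level (Z of about 1.645) with a precision of 0.1
n = required_sample_size(calls_per_month, z=1.645, precision=0.1)

# Round up, because a fraction of a monitoring session is not possible
print(math.ceil(n))  # 65
```

Under the same assumptions, calling the function with `z=1.96` and `precision=0.05` (the 95% case) gives a requirement of roughly 302 sessions for the same 1,400 calls.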

You read that right. We need to conduct 65 quality monitoring sessions per agent per month in order to draw reasonably accurate, defensible conclusions about agent performance. When you conduct far fewer than 65 quality monitoring sessions per agent, you run a growing risk that the quality score assigned could be wrong by a wide margin. The agent’s true performance may be much higher or much lower than the score suggests.
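To get a feel for how wide that margin can be, the sample size formula can be turned around to give the margin of error for a given number of sessions. This is a rough sketch under two assumptions that are not from the article: each monitored call is treated as a simple pass or fail, and the variability is set to its worst case (`p=0.5`). The function name `margin_of_error` is illustrative.

```python
import math


def margin_of_error(sample_size, population, z, p=0.5):
    """Margin of error for a proportion, with finite-population correction.

    p=0.5 is an assumed worst-case variability.
    """
    standard_error = math.sqrt(p * (1 - p) / sample_size)
    correction = math.sqrt((population - sample_size) / (population - 1))
    return z * standard_error * correction


population = 1400  # calls handled by one agent in a month

# Compare the commonly cited 8 sessions with the 65 derived above,
# both at a 90% confidence level (Z of about 1.645)
for sessions in (8, 65):
    moe = margin_of_error(sessions, population, z=1.645)
    print(f"{sessions} sessions: +/- {moe:.0%}")
```

Under these assumptions, 8 sessions leave a margin of about 29 percentage points either way, while 65 sessions bring it down to about 10.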

The risks associated with missed customer expectations are too great to leave quality scores to the quirks of random chance. It really is time for the accepted norms of current quality monitoring to be cast aside. Readily available technologies exist that can enable the practical evaluation of hundreds of calls per agent.